Information Operations as a Means of Cognitive Superiority - Theory and Term Research in Bosnia and Herzegovina

(Volume 24, No. 2, 2023.)

Author: Robert Kolobara, PhD, University of Mostar, Mostar, Bosnia and Herzegovina

DOI: https://doi.org/10.37458/nstf.24.2.3

Original scientific paper

Received: November 25, 2022

Accepted: May 7, 2023

This article is the English-language version of the article available at https://www.nsf-journal.hr/NSF-Volumes/Focus/id/1469.

Summary: The goal of today's information operations is to overthrow reality, i.e. to establish an imposed perception and understanding that lead to uniform thinking, which will ultimately cause the desired action. The mass media therefore no longer merely transmit interpretations of past events or model a narrative; they also teach the target audience how to think "correctly", thus producing a behavioral outcome of a political and social character. In step with this political and security evolution, communication has become a means of a new hybrid war in which the goal is cognitive superiority as the ultimate tool of subjugation and rule.

This paper deals with the theory of, and research on, the terms information operations and cognitive superiority in Bosnia and Herzegovina, using a sociological field survey of residents to determine inductively the level of public knowledge and awareness of them.

Keywords: Information operations, cognitive superiority, social networks, digital neurotoxicants, invasive information.

Introduction

Various authors write that smaller intellectual centers around the world have seen many developments toward potential cyber control of the basic thought processes of large segments of the world's general population. Authors such as Kelly point out that the central axis of our time is endless accelerated change. The sum of human knowledge is growing exponentially as its access and infrastructure change, Hartley and Jobson point out.

„We use the word 'noosphere' to refer to the total information available to humans. The word 'technium', on the other hand, refers to technology as a whole system. The accelerated change in the technium is obvious. New technologies emerge before we have mastered the old ones. Dangerous exploitation is simpler due to more and more enabled machines and the reduction of barriers between expert knowledge and end users. We are even witnessing the emergence of new forms of cognition. We have made progress in understanding and increasing human cognition. We must now add up the impact of artificial intelligence (AI) and cognitive objects, processes and environments“ (Hartley and Jobson, 2021).

Since the Treaty of Westphalia in 1648, the Westphalian order has defined nation-states as the main units of the geopolitical system. In practical terms, this meant that discussions about warfare and major conflicts began at the national level (McFate, 2019). But in today's combat environment, the entry level starts with the individual. In the view of the profession, the rapid rise of information as power also brings an urgent imperative for cognitive superiority.

Back in 1996, Manuel Castells, a distinguished sociologist, made the bold prediction that the Internet's integration of print, radio, and audiovisual modalities into a single system would have an impact on society equal to the establishment of the alphabet (DiMaggio, 2001, 309). And indeed the rise of social networks has changed the nature of communication more than the advent of satellite television, twenty-four-hour news and all modern television news programming combined. The symbiosis of individual connectivity and real-time mass broadcasting has seen virality regularly trump truth in the ultimate competition for attention.

The result is the emergence and enthronement of numerous psychological or algorithmic manipulations in the cognitive sphere of the human organism through a virtual program that seems never to stop. In this context, Singer and Brooking ingeniously observe: “if you're online, your attention is like a piece of contested territory, around which conflicts are being waged that you may or may not realize are going on around you. Everything you watch, like or share represents a tiny ripple on the information battlefield, privileging one side at the expense of others. Your online attention and actions are therefore both targets and ammunition in an endless series of skirmishes. Whether you are interested in the conflict or not, they have an interest in you.” (Singer and Brooking, 2018, 22-23). Social networks have become massive information honeypots ("honeypot" - an intelligence term referring, most often, to a female lure that is irresistible to opposing agents).

The vast digital space forms its own ecosystem of billions of people, where the physical and virtual merge into one, which then produces proportional consequences for the cognitive, physical, and legal aspects of an individual's life. What television once was as the primary source of information and shaper of public opinion, the smartphone is now. The difference in the destructiveness of the impact is like that between a stick of dynamite and a nuclear bomb. It is simply another dimension of influence, the evolution and emergence of a new aggressive type of information that has no empathy whatsoever for the former, information that in this paper we call invasive.

Cognitive superiority

Information has become a commodity. The struggle over the control of information and knowledge is great (Aspesi & Brand 2020). Information warfare is a generic term. It is part of both conventional warfare and non-conventional conflicts (Hartley, 2018). It is also an independent operation (Kello, 2017). Because humans are the ultimate target, all information (including that stored in the human brain and our learning) is at risk, and modes of engagement include the Internet and other technologies such as television and non-technological modalities such as non-verbal communication. Every aspect of our complex humanity is measurable and can be targeted. There is a hierarchy of goals that extends from our plume and trace on a chemical level, through our psycho-social needs and drives through our irrational aspects to our educated expanded state, point out Hartley and Jobson.

In his book The Virtual Weapon, Lucas Kello points out: "This, then, is the main transformational feature of the security of our time: Information has become a weapon in the purest sense" (Kello, 2017).

The conflict matrix of our age of accelerated paradigmatic change, including new forms of cognition, requires polythetic, multipurpose strategies, talent from new and transdisciplinary knowledge communities, and minds that are ready to meet the unexpected. Advances in the science of influence can use captology (computer-assisted persuasion) and narratology on time scales ranging from regular news releases to shi, according to Kello. Ajit Maan claims that narrative war is not the same as information war, but is a war over the meaning of information (Maan, 2018). Narratives describe the meaning of facts. Narratives have always been central to persuasion, whose tools are manifold and powerful. Narrative warfare consists of a coherent strategy that uses genuine and fake news as tactics. The currency of narrative is not truth, it is meaning, like poetry (Maan, 2018).

The idea is to create a story that leads to the desired conclusion. The story doesn't have to be true, but it has to resonate with the audience. It is effective because it bypasses critical thinking and shapes the identity of the audience, and thus their beliefs and actions (Martin & Marks, 2019). Cognitive science has shown that countering lies by repeating the word 'no' (or some other negative) actually has the opposite effect: it reinforces the false statement in the audience's mind (Maan, 2018). In order to counter false or mainstream opinion, we need to understand the assumptions and prejudices of audiences, and their social membership and identity as they are currently constructed. It is often best to avoid a direct counternarrative and instead use a larger metanarrative to reframe or encompass the opposition. In general, it is important to actively involve the listener. Offer a bigger, better, stronger, smarter alternative way of understanding, identifying, acting, Maan points out. „If the persuader is seen as an authority, as them or 'not one of us' by the persuadee, it can be helpful to begin with a gentle self-denounce to ensure you are on the same level with the audience as a step towards common ground“ (Berger, 2020).

These conflicts span enormous rifts in time and the most difficult dimensions, from the nanosecond before an electron arrives in a swift war, to the psychological seconds before we begin to act physically, through the dynamics of the so-called kill chain, up to shi, the oriental diachronic strategy of the present that creates an impact in the future measurable in decades or centuries (Brose, 2020).

The US Department of Defense currently lists five domains of military conflict: land, sea, air, space, and cyberspace (Chairman of the Joint Chiefs of Staff, 2017). A sixth domain, the cognitive, exists de facto but is only now beginning to be recognized. „When the cyber domain is reviewed and analyzed, it is quickly concluded that it is a big but not final step towards greater power, power over cognitive superiority. This cognitive domain includes complementarity which is part of each domain individually but also a separate whole in the making (which is not just the sum of the other parts) which makes up the sixth domain of warfare. It refers to new forms of cognition of the never-ending exponential growth of the sum of human knowledge, new communities of knowledge and informational approaches, simultaneously shaping trust, social memberships, meanings, identity and power“ (Hartley and Jobson, 2021).

Kinetic superiority is no longer a sure guarantee. Now with multiple different weapons and methods hidden behind multiple layers and their "residence and name," we must have cognitive superiority (Ibid.). People's goals and assumptions, their differences and perceptions and misperceptions about them are part of the cognitive domain. Samuel Visner said that the battlefield of influence in cyberspace is an instrument of national power, adding that we look at cyberspace in many ways as we look at maritime property, where some countries claim parts of the sea as their territorial waters. If the goal is cognitive superiority, it is critical to understand the adversary's cognitive domain from the global view to the tactical level, Visner believes.

The battlefield for cognitive superiority includes complex adaptive systems and systems of systems (SoS), with intelligent nodes, links, signals and boundaries, digital, hybrid and human. Visner's vision of cyber security includes safeguarding information, information systems and information technology and infrastructure, and achieving results in cyberspace that you want (not what someone else is trying to impose on you), i.e. safeguarding a part of sovereign space - cyberspace (Visner, 2018).

In an interview with CNBC during the conference in Davos 2020, the CEO of Palantir said that within five years, the country that has superior military artificial intelligence will determine the rules of the future (Karp, 2020).

Cognitive superiority is a relative attribute - sustained better thinking, faster learning and superior access to information than opponents. Fortunately, this does not require us to be individually smarter than each of our opponents, but requires us to be collectively smarter, according to Hartley and Jobson.

According to them, the bases of cognitive superiority are:

- a vision and grand strategy for an ever-increasing arc of cognitive conflict (with a commitment to cognitive superiority at the level of the former National Manhattan Project), including military artificial intelligence/machine learning – quantum superiority;

- talent, the best and brightest;

- lifelong learning and developing minds to be ready for the unexpected;

- favored access to the border of science and technology (Eratosthenes' affiliation);

- personalized systems of adaptive learning for adults, which use digital and traditional pedagogy with superior access to information;

- the superiority of the fascination of influence as part of new knowledge about the vulnerability and potential of man;

- cyber security with resilience (defensive and offensive);

- knowledge of people, objects, processes and the environment in order to deal with new forms of knowledge that appear (Cf. Hartley and Jobson, 2021, 23).

The media has created an environment that encourages individual attacks on other individuals. Furthermore, there are traditional corporate actors that seek to advance their goals through influence operations. Moreover, our networked system creates cognitive demands that most users are not equipped to handle. When computers were large machines tended by assistants in white lab coats, users could safely remain ignorant of the requirements of the technology. Now, every personal computer, smartphone, smart TV or other device requires configuration and care by the owner, who is not equipped with a white lab coat or the knowledge and experience to meet its requirements (Rothrock, 2018). Together, these actors create an environment of constant conflict.

According to Rothrock, 76% of respondents to a study reported that their computers were compromised in 2016, while the figures were 71% for 2015 and 62% for 2014 (Rothrock, 2018). This means that any system should expect to be compromised, that is, successfully attacked despite having good defenses, Rothrock concludes. He adds that we need to defend several different layers and develop resilience in order to recover and recuperate from a successful attack. Resilience is "the capacity of a system, business, or person to maintain its core purpose and integrity in the face of dramatically changed circumstances" (Rothrock, 2018).

The rise of artificial intelligence and machine learning, ubiquitous surveillance, big data analytics and the Internet has advanced academic research into what we call influence, and into how that influence can be converted into effective behavior change. The emergence of mass Internet connectivity promises countless benefits. While these benefits are evident, it also multiplies the areas of vulnerability to cyber attacks. Barriers to entry into cognitive warfare are greatly reduced, including in the cyber and biological warfare domains. Quantum computing is leading to incredible scientific progress, but stolen encrypted data that currently has little value will have great value in the future due to the possibility of retroactive decryption. According to Jeremy Kahn, artificial intelligence "will be the most important technological development in human history" (Kahn, 2020).

Kai-Fu Lee describes his view of machine learning and artificial intelligence in his book AI Super-Powers. Quantum computing, communication and sensing are in their embryonic stages. As they mature, we can expect major disruptions in digital security. Current security processes are expected to be easily defeated by decryption. Encrypted files and communications stolen today will be decrypted (retrograde decryption) in the future, Lee claims. The full extent of the coming impact is still unknown.

„All of this fits and blends with the new science of persuasion. The conflict is more complex, multi-domain, trans-domain, polythetic, multi-order, more rapidly adapting and more patient than any previous conflict in history. We face opponents who join the fight without restrictions and without rules. The exponential increase in the sum of human knowledge and its application in technology led to the appearance of complex adaptive systems that we call cognition. For example, we are now discussing cognition in machines, where its quality is the topic of conversation, not its existence“ (Hartley and Jobson, 2021, 10).

The science of influence and persuasion is continuously being transformed, armed with new methods and metrics for sensing, experimenting on and targeting hybrid human cognition. With these methods and addictive technology, persuasion is increasingly important for achieving power in statecraft, computational propaganda, marketing, lobbying, public relations, and narrative and memetic warfare using "social media slogans, images, and videos" (Donovan, 2019).

Persuasion (influence) is ubiquitous in all circumstances of human endeavour. It differs in combinatorial complexity, can be visible or hidden, and is constructed for immediate use and delivered urgently or with patience for the long game. Overlapping waveforms of influence come on all timescales from the immediate to the long game and can be wrapped in different packages, truth, deception, perfidy, coincidence with shi opportunity discernment. Shi is deception that involves influencing the present as part of a larger or grand strategy to influence the future at an opportune time, often for a long-term zero-sum game (Pillsbury, 2015).

In an age of mass and personalized surveillance, digital social networks can be personalized and "optimized for engagement," using "flashy" news, channeling attention to messages of affirmation and belonging, and messages of rage toward preconceived enemies, for affiliative or dissociative ends. Armed with conditional probabilities (powered by machine learning), AI suggestion engines are powerful persuaders (Polson & Scott, 2018). It doesn't take an authoritarian state to turn a neural network to evil intent. Anyone can build and train one, using free, publicly available tools. The explosion of interest in these systems has led to thousands of new applications. Some can be described as helpful, others as strange, while some can rightly be described as terrifying, according to Singer and Brooking. For example, such networks can study recorded speech to infer its components (pitch, rhythm, intonation) and learn to imitate the speaker almost perfectly. Moreover, a network can use its voice mastery to approximate words and phrases it has never heard. With a minute-long audio recording, these systems can produce a good approximation of a person's speech patterns. With a few hours, they are essentially perfect (Singer & Brooking, 2018). The largest digital platforms can gather and direct attention on a global scale. Recent advances in combinatorial persuasion, armed with artificial intelligence and augmented with personal and group metrics, can make many individuals and masses more inclined to follow through on suggestions. The determined can become obedient. Technology can speed up the decision-making process and thus change the probability (Ibid.).
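The phrase "conditional probabilities" can be made concrete with a toy example. The sketch below is an illustration only, not the method of any real platform or of the cited authors: it assumes a handful of invented viewing histories with hypothetical item names (outrage_clip, rally_post and so on), estimates P(other item | item already seen) from simple co-occurrence counts, and ranks suggestions by that estimate.

```python
from collections import Counter, defaultdict

def fit_conditional(histories):
    """Count, for each item a, how many histories contain a and how many
    of those also contain each other item b."""
    pair_counts = defaultdict(Counter)
    item_counts = Counter()
    for history in histories:
        items = set(history)
        for a in items:
            item_counts[a] += 1
            for b in items - {a}:
                pair_counts[a][b] += 1
    return pair_counts, item_counts

def suggest(seen, pair_counts, item_counts, k=1):
    """Rank unseen items by the estimate P(b | a) = count(a and b) / count(a),
    taking the strongest single seen item a as evidence."""
    scores = {}
    for a in seen:
        for b, n in pair_counts.get(a, {}).items():
            if b in seen:
                continue
            p = n / item_counts[a]
            scores[b] = max(scores.get(b, 0.0), p)
    # Highest probability first; ties broken alphabetically for stable output.
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))[:k]

# Invented toy data: each list is one hypothetical user's viewing history.
histories = [
    ["outrage_clip", "rally_post"],
    ["outrage_clip", "rally_post", "cat_video"],
    ["outrage_clip", "rally_post"],
    ["cat_video", "recipe"],
]

pair_counts, item_counts = fit_conditional(histories)
top = suggest({"outrage_clip"}, pair_counts, item_counts, k=2)
```

In this toy data every user who saw outrage_clip also saw rally_post, so rally_post is ranked first for a new user who has just watched outrage_clip. Real suggestion engines are far more elaborate, but the design choice is the same: the next item shown is chosen because past behavior makes engagement with it most probable, not because it is true or useful.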

A fundamental human bias is our default to truth, our initial assumption that what we are told is true. We also have the opposite, an "open alertness" or reactance, which must be overcome before we move away from our initial opinion or are convinced (Gladwell, 2019). Fake news is both a product of information conflict and a tool in information conflict. We can define it as fictional information that mimics news media content in form but not in organizational process or intent. Social media has facilitated the spread of fake news, and the appeal of conspiracy theories has fueled the fire for even greater penetration. Guadagno and Guttieri state that motivated belief (confirmation bias), emotional contagion, and delusions support acceptance of fake news (Guadagno & Guttieri, 2019).

Since people are used to receiving information from the media, they tend to adopt it uncritically (including fake news). Where there is conflicting information, people prefer the information that confirms their existing attitudes and beliefs: they are more inclined to "open" and adopt such news and to give it primacy over news with which they disagree.

In the book Weaponized Lies, Levitin claims that: "We have three ways to absorb information: We can discover it ourselves, we can absorb it implicitly, or it can be explicitly told to us" (Levitin, 2016). We have a limited reality, our discovery of new knowledge is limited by our own preconceptions. For example, when children play computer games, they follow the rules of the game, whether the rules are a valid representation of reality or not. The more realistic the game seems, the more likely we are to absorb lessons that we will then apply in reality. This means we are vulnerable to someone creating rules to suit their intentions and desires. The same applies to books that often depict human stereotypes and we tend to adopt these stereotypes in our thinking process. When we are told something explicitly, we have the opportunity to believe it or not. But the supposed authority of the source can influence our decision. Fake news creators can sway our choices by imitating or discrediting authoritative sources, concludes Levitin.

Lies have been a part of human culture for as long as records have been kept. They are attacks on the noosphere (total information available to humanity). However, our current (seeming) insistence on euphemisms, such as counter-knowledge, half-truths, extreme views, alternative truths, conspiracy theories and fake news in place of the word "lies" and the disruption of our education system, with respect for individual critical opinions, has lowered the bar for lies that can now be weaponized (Cf. Levitin, 2016). Such ammunition consists of stories, words, memes, numbers, pictures and statistics. Featured topics include deepfakes (a deepfake is a video of a person in which the face or body has been digitally altered to look like someone else, usually used maliciously or to spread false information, news, images or sounds), associative decoding (inserting false memories), meaning platforms, serenics, and knowledge of the dynamics of the spread of information versus the spread of behavior or the spread of violence, in traditional and digital systems (Centola, 2018). Weaponized lies deliberately undermine our ability to make good decisions.

The scale and frequency are indicated by the estimate that more than half of web traffic and a third of Twitter users are bots (Woolley & Howard, 2019). "Twitter falsehoods spread faster than the truth" (Temming, 2018). This danger led the World Economic Forum in 2014 to identify the rapid spread of disinformation as one of the 10 dangers to society (Woolley & Howard, 2019).

Schweitzer says that the motive of the Universe is connection and claims that this holds at the quantum level and in physics, chemistry, biology, sociology and our traditional and digital networks. As part of this motive, battles of conflicting ideas have always taken place along the human scale, from dyadic relationships to the highest levels of national and international politics and statecraft. The ascending recasting of information as power negates neither the wisdom of Sun Tzu nor the strategy of von Clausewitz. What it does is transform them into a complex matrix where the only restrictions are the laws of physics, access to information and the limits of the cognitive, concludes Schweitzer.

"No, sir, it's not a revolt, it's a revolution," was the answer of the Duke of Rochefoucauld to Louis XVI after the storming of the Bastille (Walton, 2016). „What we are experiencing is an information revolution, not a revolt. The distinction is that change, paradigmatic, transformative and accelerating, is taking place worldwide. Just as events in history must be understood in the matrix (conditions) of their era, today's information conflicts must be understood in today's matrix. Our matrix is one of accelerated change within our surrounding complex adaptive systems and accelerated change in the very nature of man as a biological, psychological, sociological, technological and informational being in the making. The revolution is in the technium (technology as a whole system), in the noosphere (the totality of information available to humanity), in man and in our knowledge of man, including our aspects of predictability and systemic irrationality“ (Ariely, 2009).

The recent past has shown us fundamentally important changes. Scientific achievements with the help of experimentation and big data analysis have converted persuasion from the position of art to a combination of art and science. Computer technology has yielded increased processing speed and capacity, increased both local and remote memory, and increasingly powerful software applications. Total computing power is increasing by a factor of ten every year (Seabrook, 2019). The Internet has connected the world, and artificial intelligence and machine learning have begun the process of restructuring many processes of civilization. Together, these changes make surveillance and control technologies ubiquitous.

From all these changes invasive information was born that helps:

1. States in the control and supervision of their citizens, the implementation of military-security-police operations, campaigns and activities;

2. Which controls the axis and landmark of economic behavior, marketing and production;

3. And finally, political entities that, with the help of invasive information, create their election campaigns, influence campaigns and implement all kinds of reforms within society, the state and organizations, whether national or international.

What is invasive information and how do we define it? Invasive information is a term that refers to the sum of ubiquitous surveillance and control information collected, which includes public and private surveillance cameras (movement and contacts), monitoring of overall online behavior and activities (socializing, shopping and interests), monitoring (audio and visual) in real time and space via smart mobile devices, satellites and drones, and any other information collected as a result of these measures and actions. The very idea that you are today, willingly or unwillingly, under twenty-four-hour surveillance means that someone or something knows everything about you: your basic characteristics, what you like and what you don't like, who you spend time with, where and when you move and live, where, what and how you buy, what your political, religious, nutritional, technological, sexual, social, health, entertainment, sports and any other tendencies, weaknesses, potentials, habits and preferences are, and what you say, write and listen to in real time and space! Everything remains recorded and stored. What agents of the intelligence services previously collected about individuals only partially, for a limited period and with considerable effort and expense (and a court order), technology companies now collect with ease and with the individual's own consent, many times better, more extensively and more successfully, and continuously over time.

The paradox is that we have turned into our worst enemies by helping "Big Brother" to monitor, share and collect data and information about ourselves. From posts about anything and everything to selfie photos that often go to useless extremes, the hunger of the human psyche for acceptance, self-aggrandizement and promotion and pursuit of pecuniary interests puts us all within reach and subjection of this weapon.

The reach of artificial intelligence and machine learning, in symbiosis with state systems of general and ubiquitous surveillance, represents a terrifying aspect of future life, or of control over it. The justification is always that honest, law-abiding citizens have nothing to fear, which takes for granted that the laws are always correct and just and that the government is good and infallible. People easily forget that it was on the basis of law that people were sent to concentration camps, and that throughout human history people have been legally killed, imprisoned and kidnapped, all under the pretext of the legitimacy of the current government and the dogma of its infallibility and correctness. We saw the latest examples of universal madness, irrationality and human stupidity during the Covid-19 pandemic, when totalitarian measures limiting basic human rights and freedoms were introduced because of wrong assumptions, ignorance and the narcissism of the rulers, while the effect on health preservation was weak or at best imperceptible in relation to the end result of the virus campaign. A ban on leaving the country, the closing of protesters' bank accounts, the violence of the repressive apparatus against them, the prohibition of protests against the government and the use of the army in the streets: no, this is not Belarus, but Canada. This shows the inversion of values in societies that have been proud of freedom and democracy for years. The truth has "changed" beyond recognition, to the point of absurdity where any reference to facts is declared subversive, a threat to the official order, and whoever states it publicly is dehumanized and his credibility destroyed.

Social networks

Today, when secrets generally have a shortened lifespan in an environment "where everything is true", information wants to be free more than ever. In Mobile Persuasion Fogg said "I believe that mobile phones will soon become the most important platform for changing human behavior" (Fogg & Eckles, 2014).

Singer and Brooking (LikeWar - The Weaponization of Social Media, 2018) write that many online activists have learned the hard way that social media is a shifting political battleground where what is said, shared and written carries real-life consequences. Often the restrictions imposed are wrapped in the guise of religion or culture. But it's almost always really about protecting the government. The Iranian regime, for example, keeps its internet clean of any threats to "public morality and chastity," using such threats as grounds for arresting human rights activists. In Saudi Arabia, the harshest punishments are reserved for those who question the monarchy and the government's competence. A man who mocked the king was sentenced to 8 years in prison, while a man in a wheelchair received 100 lashes and 18 months in prison for complaining about his medical care. In 2017, Pakistan became the first nation to execute someone for online speech after a member of the Shiite minority got into an argument on Facebook with a government official posing as someone else. More than religion or culture, this new generation of censors relies on appeals to national strength and unity. Censorship is not for their sake, these leaders explain, but for the good of the country. A Kazakh man who visited Russia and criticized Russian President Vladimir Putin on his Facebook page was sentenced to three years in prison for inciting "hate". A Russian woman who published negative stories about the invasion of Ukraine was given 320 hours of work for "discrediting the political order".

The Kremlin's idea is to own all forms of political discourse, not to allow any independent movements to develop outside its walls, writes Peter Pomerantsev, author of the book Nothing is True and Everything is Possible. A more visible vehicle for this effort is Rossiya Segodnya (Russia Today, or RT), a news agency founded in 2005 with the stated intention of sharing Russia with the world. "When government authorities struggled to stop the fervent democratic activism that spread across Russian social media after the color revolutions and Arab Spring, a group stepped in to pull the plug, praising Putin and trashing his opponents. The Kremlin, impressed by these patriotic volunteers, used the engine of capitalism to accelerate the process. He asked Russian advertisers to see if they could offer the same services, dangling fat contracts as a reward. Almost a dozen large companies have committed themselves. And so the 'troll factories' were born. Every day, hundreds of young hipsters would arrive at work at organizations like the innocuously named Internet Research Agency, housed in an ugly neo-Stalinist building in the Primorsky District of St. Petersburg. They would settle into their cramped quarters and get down to business, assuming a series of false identities known as 'sockpuppets'. The job was to write hundreds of posts on social networks a day, with the aim of hijacking conversations and spreading lies, all for the benefit of the Russian government. Those who worked on the 'Facebook desk' targeting foreign audiences were paid twice as much as those targeting domestic audiences. According to documents leaked in 2014, each employee is required to publish newspaper articles 50 times during an average twelve-hour day. Each blogger must maintain six Facebook accounts that publish at least three posts per day and discuss news in groups at least twice a day" (Singer and Brooking, 2018).

Digital sociologists describe the influence of social networks on young people, stating that they create a new reality that is no longer limited by horizontal perception and in which an online argument can be, and is, just as real as an old-fashioned face-to-face argument. The difference in an online conflict is that "the whole world" witnesses what is said. Today, 80% of the fights in Chicago schools are instigated online. Gang murders in Chicago took on a whole new dimension once social media became a primary means of communication. Many conflicts arise from inappropriate comments and insults provoked by content published on social networks (Ibid.). Because young gang members take insults deeply to heart, such exchanges lead to personal revenge as well as to "protecting the reputation" of the entire gang. Robert Rubin, who leads the Chicago advocacy group Advocates for Peace and Urban Unity, which helps (former) gang members, points out that social media is a faceless enemy and believes that words today cause people to die (Public Enemies: Social Media Is Fueling Gang Wars in Chicago).

Boasting of material wealth by posting numerous pictures, and depicting the brutal executions of rivals on social networks, is a phenomenon that has spread across the region among members of various drug cartels and street gangs throughout Central and South America. The publication of cruel pictures of the dead from battlefields around the world has also, unfortunately, become a regular occurrence.

Captology is the study of computers as persuasive technologies, Fogg points out. This includes the design, research, ethics and analysis of interactive computing products (computers, mobile phones, websites, wireless technology, mobile applications, video games, etc.) created for the purpose of changing human attitudes or behavior. In his book Persuasive Technology, Fogg writes about the elements of captology. It is clear that the potential target of persuasion (the person) is not interacting with a human being in a face-to-face encounter. Nor, however, is the target interacting with a "computer" as such, but with a computer interface, typically a computer monitor, a cell phone screen, or a voice "personality" such as Alexa or Siri. The large amount of human interaction that occurs through these same devices reduces the perceptible difference between face-to-face and computer-based persuasive encounters (Fogg, 2003). Fogg pointed out several advantages that computers have over humans in influence campaigns. Computers are persistent, allow anonymity, can access huge data sets, can vary their presentations in every possible shape, size and quantity, can direct simultaneous attacks at many targets, and can simply be everywhere. These advantages are really just the beginning. People can also vary their persuasive presentations, but this variation depends on a particular person's skill in reading the situation and adapting to it. Computer adjustments can instead be based on scientific research, using these large data stores to select the variation most likely to succeed in a given situation, Fogg claims.

Propaganda, amplified by the new and deliberate force of social media, can produce mild to severe cognitive decline, attention deficits, and mood and anxiety disorders, and can modify impulsivity or aversion to the enemy, thereby affecting cognitive capital. The areas of interest of the cognitive battlefield include the use of digital neurotoxicants for mental contamination, broad and deep mental experiments, behavioral optimization, immediate (even if temporary) influence on affect, and the management of positive and negative expectations, feelings of fear, and imaginary or manufactured threats.

The author defines digital neurotoxicants as cognitive substances that can cause harmful effects on the psychosomatic system and ultimately, if necessary, cause obedient action or non-action. The vast number and variety of potentially neurotoxic cognitive substances includes information, misinformation, wrong information, truths, half-truths, non-truths, facts, lies, accurate and inaccurate data and statistics, knowledge and counter-knowledge. Their sources include television, print, radio, digital and social media and networks, and statements of officials, politicians, scientists, public figures and ordinary residents, as well as knowledge stored in books, memories and in the noosphere. The entry of relevant cognitive substances can occur through media, social and personal absorption. In short, digital neurotoxicants are the sublimation of all operational means that affect our cognitive functions, shape public opinion and drive us to action.

Information operations rely less and less on classic lying; in disinformation campaigns they increasingly engage in the creation of facts in such a way as to build a comprehensive new reality spread across multiple dimensions and levels. This is possible because the evolution of information has reached a level at which it exploits a human cognitive limitation, namely that the lifetime of knowledge is limited to a single human lifetime. History has become an information art canvas on which you can literally paint what you want, say what you want without any responsibility and determine the "truth" that suits you at the moment. When all this is formatted as a scientific work and included in the educational system, the process of indoctrinating the new generation begins.

Most people are unaware of how the media damages our cognitive highways and our emotional centers until they realize the amount of anger, hatred, and exclusivity they carry with them every day. They start fights and arguments with others even when these could be avoided. Whether in the workplace or in public or private space, they vent their anger at anyone who disagrees with their views, attitudes and beliefs, to the point of physical confrontation. All of this is a consequence of the influence of the media, that is, of information activities. It should be emphasized that 99% of those affected gain absolutely no tangible material or financial benefit from such behavior; on the contrary, they suffer only damage. This is where the propaganda paradox lies: the target works wholeheartedly toward his own harm, carried by the most intense emotions. This kind of media predator, which has found a new habitat in social networks, feeds on your anger and attention. It brings out the worst in you, inciting violence, first within your consciousness and then all the way to the physical level, turning you into a modern zombie who spends several hours a day hanging on various screens, absorbing every particle of light and every electron of transmission. The addiction caused by hatred and the pathological desire to defeat and convince the other is fed by even more hours of exposure to sources of information and participation in various posts and debates. This process of drawing energy and directing and capturing attention accomplishes the goals of those who control it. The moment you become "addicted", no one can tell you anything new; only you are informed, know all the secrets and have insight into the state of those who rule from the shadows (not to mention established misinformation). And so we return to the initial absurdity and ambiguities, which once again become a breeding ground for misinformation, half-lies and a multitude of interests.
The only known remedy (at least to this author) is exclusion from that informational environment and cessation of voluntary participation.

Research

During August and September 2022, a sociological field survey was conducted on a sample of roughly 600 citizens in three large B&H cities: Mostar, Sarajevo and Banja Luka. The aim of the research was to examine the level of public knowledge about the terms and topics of information operations, cognitive superiority and related activities. A total of 603 persons participated in the research, of whom 48% were male (N = 286) and 52% were female (N = 316), while one participant (N = 1) did not state their gender.

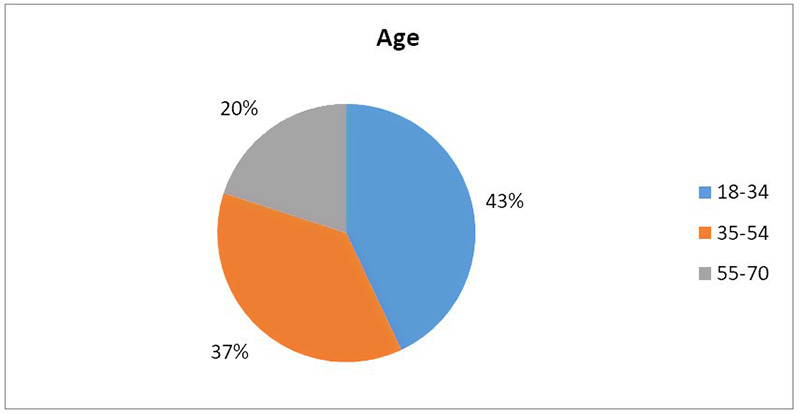

As for the age distribution, 43% (N=259) of the participants are aged 18-34, 37% (N=223) are aged 35-54, and 20% (N=121) are aged 55-70.

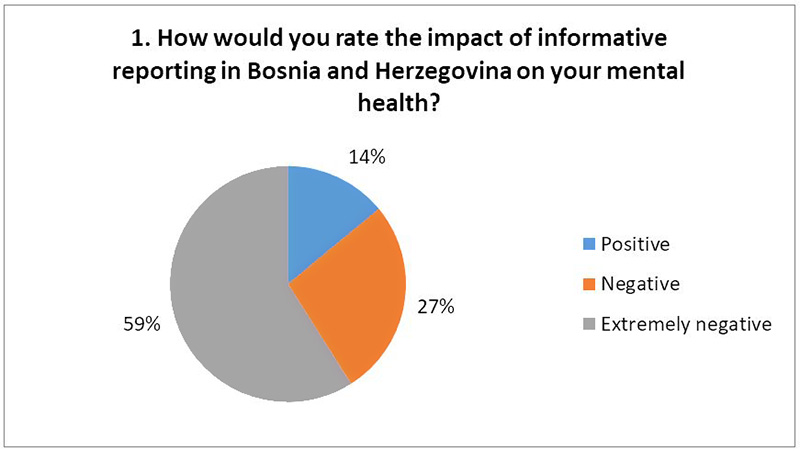

When asked "How would you rate the impact of news reporting in B&H on your mental health?", 59% of participants (N=353) answered extremely negatively, 27% (N=166) negatively and 14% (N=84) positively.

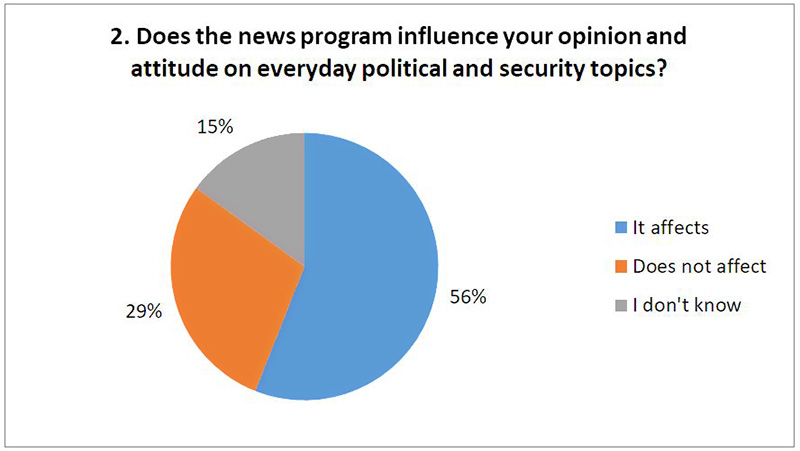

When asked "Does news programming influence your opinion and attitude on everyday political and security topics?", 56% (N=334) of the participants said that it does, 29% (N=177) that it does not and 15% (N=92) that they do not know.

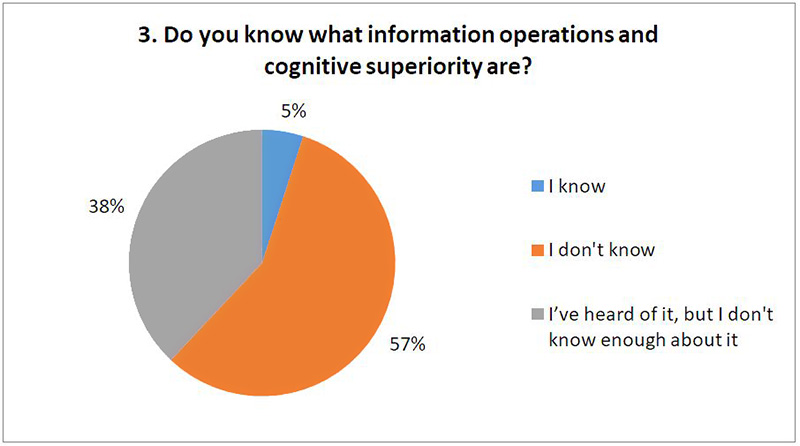

When asked "Do you know what information operations and cognitive superiority are?", 57% (N=342) of the participants stated that they did not know, 38% (N=228) that they were familiar with the terms but did not know enough about them, and 5% (N=33) that they knew.

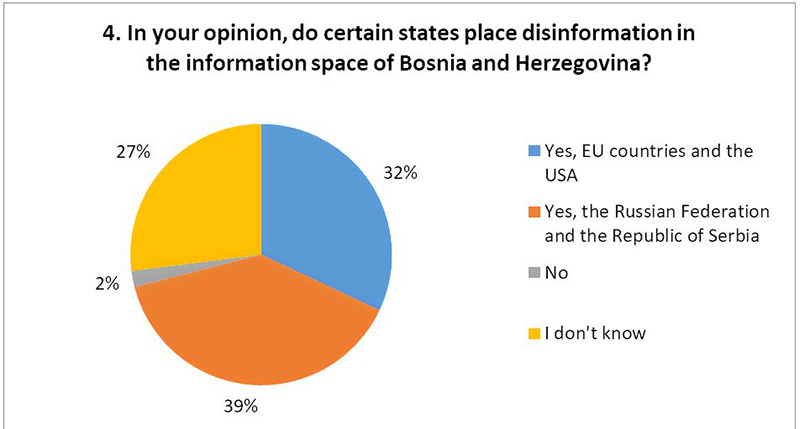

When asked "In your opinion, do certain states place disinformation in the information space of Bosnia and Herzegovina?", 39% (N=310) of the participants said Yes, emphasizing the Russian Federation and the Republic of Serbia, 32% (N=248) said Yes, emphasizing the EU and the USA, 27% (N=210) said they do not know and 2% (N=19) said No.

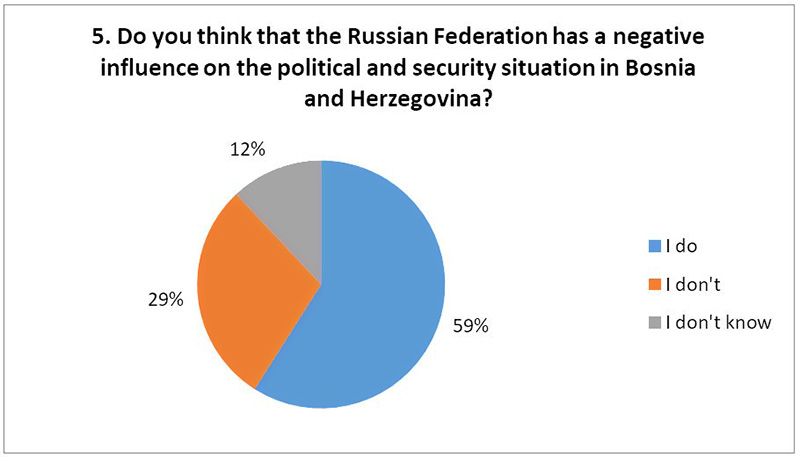

When asked "Do you think that the Russian Federation has a negative influence on the political and security situation in Bosnia and Herzegovina?", 59% (N=358) of the participants said that they think so, 29% (N=174) that they do not think so and 12% (N=71) that they do not know.

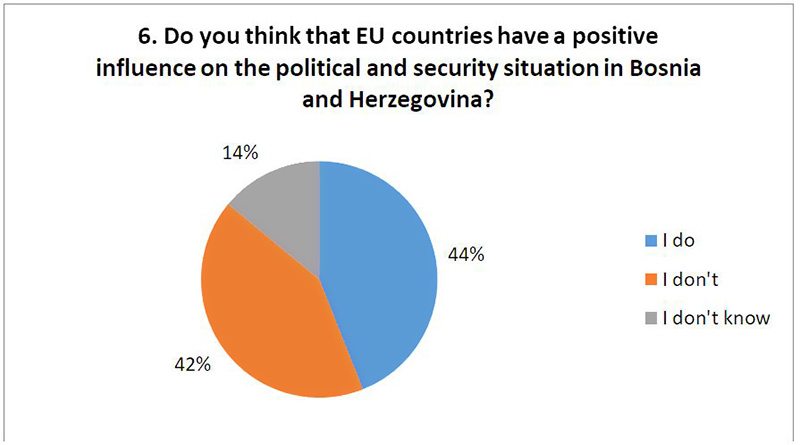

When asked "Do you think that EU countries have a positive influence on the political and security situation in Bosnia and Herzegovina?", 44% (N=267) of the participants said that they think so, 42% (N=252) that they do not think so and 14% (N=84) that they do not know.

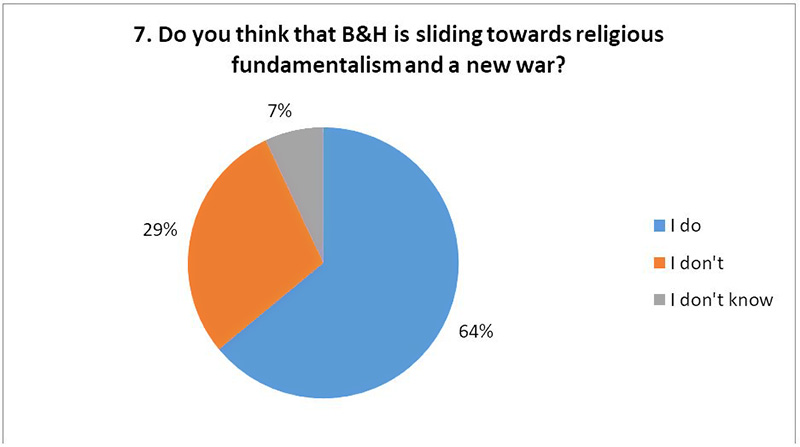

When asked "Do you think that B&H is sliding towards religious fundamentalism and a new war?", 64% (N=474) of the participants said that they think so, 29% (N=210) that they do not think so and 7% (N=50) that they do not know.

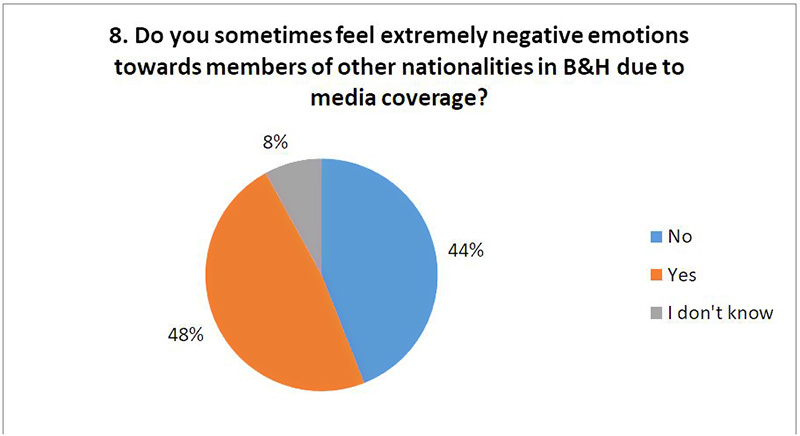

When asked "Do you sometimes feel extremely negative emotions towards members of other nationalities in Bosnia and Herzegovina due to media coverage?", 48% (N=346) of participants said Yes, 44% (N=318) said No and 8% (N=55) said they do not know.
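The percentages and counts reported above can be cross-checked with simple arithmetic. The following is a minimal sketch, not part of the original study: it copies the reported (percent, N) pairs from the text, sums the counts per question, and recomputes each share from that sum. The dictionary keys are hypothetical short labels introduced only for this illustration, and the stated total of 603 participants is taken from the opening paragraph of this section.

```python
from typing import List, NamedTuple, Tuple

# Total number of participants as stated in the Research section.
PARTICIPANTS = 603

# (reported percent, reported N) pairs copied from the text.
# The keys are hypothetical labels, not the survey's own wording.
REPORTED = {
    "age": [(43, 259), (37, 223), (20, 121)],
    "mental_health": [(59, 353), (27, 166), (14, 84)],
    "news_influence": [(56, 334), (29, 177), (15, 92)],
    "knows_terms": [(57, 342), (38, 228), (5, 33)],
    "disinformation": [(39, 310), (32, 248), (27, 210), (2, 19)],
    "russia_negative": [(59, 358), (29, 174), (12, 71)],
    "eu_positive": [(44, 267), (42, 252), (14, 84)],
    "new_war": [(64, 474), (29, 210), (7, 50)],
    "negative_emotions": [(48, 346), (44, 318), (8, 55)],
}


class Check(NamedTuple):
    total: int                  # sum of the reported counts
    recomputed: List[int]       # shares recomputed from that sum, rounded
    matches_participants: bool  # does the sum equal the stated 603?


def check(rows: List[Tuple[int, int]]) -> Check:
    """Sum the counts and recompute each share as a whole percent."""
    total = sum(n for _, n in rows)
    recomputed = [round(100 * n / total) for _, n in rows]
    return Check(total, recomputed, total == PARTICIPANTS)


if __name__ == "__main__":
    for label, rows in REPORTED.items():
        result = check(rows)
        reported = [p for p, _ in rows]
        print(label, result.total, reported, result.recomputed,
              "ok" if result.matches_participants else "total differs")
```

Run on the figures above, the counts for six questions sum to 603, while the counts for the disinformation, new-war and negative-emotions questions sum to 787, 734 and 719 respectively. The text does not say whether multiple answers were allowed for those questions, so the sketch only flags the difference and does not explain it.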

Conclusion

You can only comprehend the truth yourself; it can hardly be reliably transmitted. For this reason, among others, it is difficult or almost impossible to find the truth in the media: truth is comprehended directly and immediately, and it is very difficult to convey. The question then arises of how to comprehend a truth that is a thousand, two thousand or five thousand kilometers away. The answer is: with great difficulty or not at all, because as soon as it is transmitted it becomes part of an interpretation, and interpretation is always subjective and never objective. These are partially overlapping boundaries of reality.

It doesn't even matter if you believe the lie, only if you accept it. If you accept it, it essentially means that you surrender, make yourself available for use by others. The power of lies and misinformation lies in the fact that small mistakes made on the basis of them can turn into catastrophic failures. We live in a strangely polarizing time where not only are there opinions that divide us, but we seem to be getting information from separate sources that then lead to misunderstandings about what are essentially the facts of many phenomena.

Social networks have changed not only the message but also the dynamics of conflicts. The way in which information is accessed, how it is disposed of and manipulated, and how it spreads has taken on a completely new dimension. The whole paradigm of victory and defeat has been fundamentally turned upside down, because now informational activity determines the winners and losers in hyperreality. In this apparent reality, cognitive maneuvers and cognitive strategy have become indispensable processes and fundamental preparatory actions, and social networks are the bloodstream of their influence.

Directing and occupying attention, and consequently occupying consciousness, are techniques and actions on the way to cognitive superiority. The effects of psychological and algorithmic manipulations through a multitude of viral operations lead to such psychological stress, intellectual stagnation and data-processing overload that ultimately the exhausted and information-bombarded mind simply surrenders, unable to deal with the situation. It is a mind that is no longer able to think or make critical judgments, but just surrenders and makes itself available. Allegorically speaking, it is like being constantly washed over by huge waves that throw you off balance again and again, to the point of complete exhaustion, when you simply surrender physically and mentally to a greater, inexorable force.

Social media has reshaped the information war, and the information war has now radically reshaped the perception of reality and consciousness. In this great seismic shift, it is important to understand that cognitive superiority is achieved through the ability to shape a narrative, which in turn influences, develops and creates the contours of our understanding of reality (more precisely, the targeted appearance of reality) and thus causes feelings that drive us to act or not to act. At the same time it creates a psychological connection with us on the most personal level, while establishing the conditions for the same thing to happen to us over and over again.

The illusion faithfully reproduces the relief, clarity and extraordinary likeness of reality, and as such it leaves a long-lasting and strong impression on the information-conditioned, and consequently deranged and disturbed, human organism. An illusion of reality is created, and an illusion cannot be struck back at, because there is little in the way of a protective mechanism once you are exposed to it. What you can do is not expose yourself to such actions or receive information from these and similar sources. This brings us to the paradox of the modern information environment, in which the picture is clearer the further away you are, the less emotionally involved you are and the less information you have, and the more you use your intellect based on objective historical and universal facts.

The results of the research in Bosnia and Herzegovina (which was carried out in the immediate pre-election period) showed that the majority of residents do not know the terms cognitive superiority and information operations. On the other hand, the research shows that most respondents report a negative impact of media information operations on their mental health and mood, and that these operations shape their awareness of current political and security issues. 32% of the respondents believe that EU countries and the USA place misinformation in the information space of B&H, compared to 39% who think the same about the Russian Federation and the Republic of Serbia. Also, 59% of respondents believe that the Russian Federation has a negative influence on the political and security situation in Bosnia and Herzegovina. What is surprising is that only 44% of respondents believe that the EU has a positive influence on the political and security situation in B&H, compared to 42% who think the opposite. In the oral comments they gave during the survey, respondents from this 42% point to the Federal Republic of Germany as a country that deliberately maintains the status quo in these (state) areas, with the aim of extracting qualified labor from the country. What is worrying is that as many as 64% of the respondents believe that B&H is sliding towards religious fundamentalism and a new war, while at the same time 48% of the respondents say that they sometimes have extremely negative feelings towards members of other nationalities in Bosnia and Herzegovina.

Cite as:

APA 6th Edition

Kolobara, R. (2023). Information operations as a means of cognitive superiority - theory and term research in Bosnia and Herzegovina. National security and the future, 24 (2), 41-68. https://doi.org/10.37458/nstf.24.2.3

MLA 8th Edition

Kolobara, Robert. "Information operations as a means of cognitive superiority - theory and term research in Bosnia and Herzegovina." National security and the future, vol. 24, no. 2, 2023, pp. 41-68. https://doi.org/10.37458/nstf.24.2.3 Cited DD.MM.YYYY.

Chicago 17th Edition

Kolobara, Robert. "Information operations as a means of cognitive superiority - theory and term research in Bosnia and Herzegovina." National security and the future 24, no. 2 (2023): 41-68. https://doi.org/10.37458/nstf.24.2.3

Harvard

Kolobara, R. (2023). 'Information operations as a means of cognitive superiority - theory and term research in Bosnia and Herzegovina', National security and the future, 24(2), pp. 41-68. https://doi.org/10.37458/nstf.24.2.3

Vancouver

Kolobara R. Information operations as a means of cognitive superiority - theory and term research in Bosnia and Herzegovina. National security and the future [Internet]. 2023 [accessed DD.MM.YYYY.];24(2):41-68. https://doi.org/10.37458/nstf.24.2.3

IEEE

R. Kolobara, "Information operations as a means of cognitive superiority - theory and term research in Bosnia and Herzegovina", National security and the future, vol. 24, no. 2, pp. 41-68, 2023. [Online]. https://doi.org/10.37458/nstf.24.2.3

Literature

1. Aspesi, C., & Brand, A. (2020.) In pursuit of open science, open access is not enough. Science, 368, 574-577.

2. Ariely, D. (2009.) Predictably irrational. New York: HarperCollins.

3. Arquilla, John - Borer A. Douglas (2007.) Information Strategy and Warfare - A guide to theory and practice. Routledge, New York and London.

4. Armistead, Leigh (2007.) INFORMATION WARFARE - Separating Hype from Reality; Potomac Books, Inc. Washington, D.C.

5. Brose, C. (2020.) The kill chain: Defending America in the future of high-tech warfare. New York: Hachette Books.

6. Berger, J. (2020.) The catalyst: How to change anyone's mind. New York: Simon & Schuster.

7. Chairman of the Joint Chiefs of Staff (2017.) Joint planning, Joint Publication 5-0. Washington, DC: Chairman of the Joint Chiefs of Staff.

8. Chinese Academy of Military Science (2018.) The science of military strategy (2013). Lexington: 4th Watch Publishing Co. (4.W. Co, Tran.).

9. Clausewitz, C. v. (1993.) On War. New York: Alfred A. Knopf, Inc. (M. Howard & P. Paret, Trans.).

10. Centola, D. (2018.) How behavior spreads. Princeton: Princeton University Press.

11. Paul, Christopher (2008.) Information Operations - Doctrine and Practice (A Reference Handbook), Contemporary Military, Strategic, and Security Issues; Praeger Security International; Westport, Connecticut, London.

12. Donovan, J. (2019.) Drafted into the meme wars. MIT Technology Review (pp. 48-51).

13. Dean S. Hartley III; Kenneth O. Jobson (2021.) Cognitive Superiority – Information to Power. Springer Nature Switzerland AG: Cham, Switzerland.

14. Gladwell, M. (2019.) Talking to strangers: What we should know about the people we don't know. New York: Little, Brown and Company.

15. Guadagno, R.E. & Guttieri, K. (2019.) Fake news and information warfare: An examination of the political and psychological processes from the digital sphere to the real world. In I.E. Chiluwa & S.A. Samoilenko (Eds.) Handbook of research on deception, fake news and misinformation online (pp. 167-191). IGI Global.

16. Fogg, B.J., & Eckles, D. (2014.) Mobile persuasion. Stanford: Stanford Captology Media.

17. Levitin, D.J. (2016.) Weaponized lies. New York: Dutton.

18. Ben Austen, „Public Enemies: Social Media Is Fueling Gang Wars in Chicago“, Wired, 17.09.2013., https://www.wired.com/2013/09/gangs-of-social-media/.

19. Joseph L. Strange and Richard Iron (2004.) „Center of Gravity: What Clausewitz Really Meant“ (report, National Defense University, Washington, DC) http://www.dtic.mil/tr/fulltext/u2/a520980.pdf.

20. Mercier, H. (2020.) Not born yesterday: The science of who we trust and what we believe. Princeton: Princeton University Press.

21. Manuel Castells, The Information Age: Economy, Society and Culture, vol. 1, The Rise of the Network Society, quoted in Paul DiMaggio et al., „Social Implications of the Internet“, Annual Review of Sociology 27 (2001): 309.

22. Kolobara, Robert (2017.) IDEOLOGIJA MOĆI – informacija kao sredstvo, Udruženje za društveni razvoj i prevenciju kriminaliteta - UDRPK, Mostar.

23. Kelly, K. (2016.) The inevitable. New York: Viking Press.

24. Kahn, J. (2020.) The quest for human-level A.I. Fortune (pp.62-67)

25. Karp, A. (2020.) CNBC interview with Palantir CEO Alex Karp at the Davos conference. YouTube: https://www.youtube.com/watch?v=Mel4BWVk5-k

26. Kello, L. (2017.) The virtual weapon and international order. New Haven: Yale University Press.

27. Lee, K.-F. (2018.) AI superpowers: China, Silicon Valley, and the new world order. Boston: Houghton Mifflin Harcourt.

28. Singer, P.W., & Brooking, E.T. (2018.) LikeWar: The weaponization of social media. Boston: Houghton Mifflin Harcourt.

29. Seabrook, J., (2019.) The next word. The New Yorker (pp. 52-63).

30. Pillsbury, M. (2015.) The hundred-year marathon: China's secret strategy to replace America as the global superpower. New York: St. Martin's Press.

31. Rothrock, R.A. (2018.) Digital resilience: Is your company ready for the next cyber threat? New York: American Management Association.

32. McFate, S. (2019.) The new rules of war: Victory in the age of disorder. New York: William Morrow.

33. Visner, S.S. (2018.) Challenging our assumptions: Some hard questions for the operations research community. 11.12.2018. Mors Emerging Techniques Forum: http://www.mors.org/Events/Emerging-Techniques-Forum/2018-ETF-Presentations

34. Temming, M. (2018.) Detecting fake news. Science News (pp. 22-26).

35. Walton, G. (2016.) Unique histories from the 18th and 19th centuries. Retrieved 07.07.2018. from https://www.geriwalton.com/words-said-during-french-revolution/